A Generative Adversarial Network for AI-Aided Chair Design

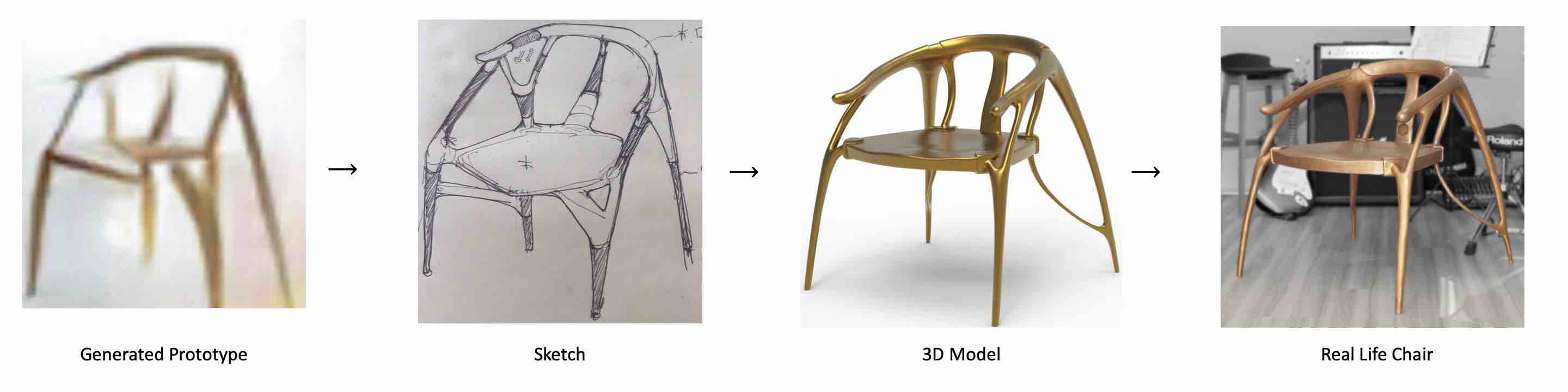

We present a method for assisting human designers of chairs. The method generates a large number of chair candidates so that a designer can create sketches and 3D models based on the generated designs. It consists of an image synthesis module, which learns the underlying distribution of the training dataset; a super-resolution module, which improves the quality of the generated images; and human involvement. Finally, we manually pick one of the generated candidates and build a real-life chair from it for illustration.

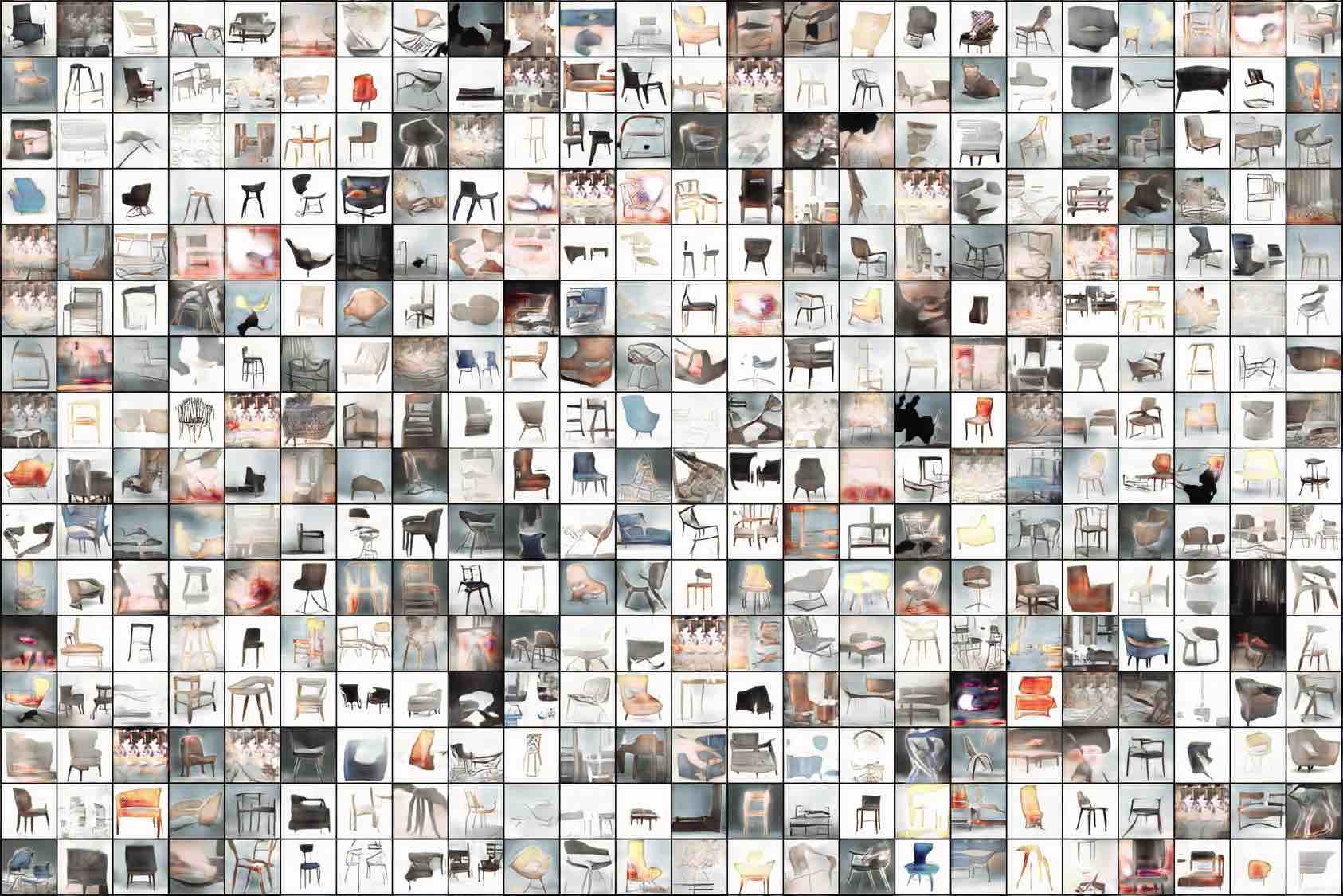

Dataset We scraped around 38,000 chair images from Pinterest to train the image synthesis module. To fine-tune the image super-resolution network, we downscale the images by a factor of 4.
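The downscaling step can be illustrated with a minimal sketch. It assumes a simple box (block-average) filter on a grayscale image stored as a list of rows; the source does not state which resampling kernel was used, and the helper names `downscale_box` and `make_training_pair` are hypothetical.

```python
def downscale_box(image, factor=4):
    """Downscale a 2-D grayscale image (list of rows) by averaging
    non-overlapping factor x factor blocks. Box filtering is an assumption;
    the resampling kernel is not given in the text."""
    height, width = len(image), len(image[0])
    if height % factor or width % factor:
        raise ValueError("image dimensions must be divisible by the factor")
    low_res = []
    for top in range(0, height, factor):
        row = []
        for left in range(0, width, factor):
            block = [
                image[y][x]
                for y in range(top, top + factor)
                for x in range(left, left + factor)
            ]
            row.append(sum(block) / len(block))
        low_res.append(row)
    return low_res


def make_training_pair(high_res, factor=4):
    """Return a (low-resolution input, high-resolution target) pair."""
    return downscale_box(high_res, factor), high_res
```

Each pair gives the super-resolution network a low-resolution input and the original image as its target.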

We generate 320,000 chair design candidates at

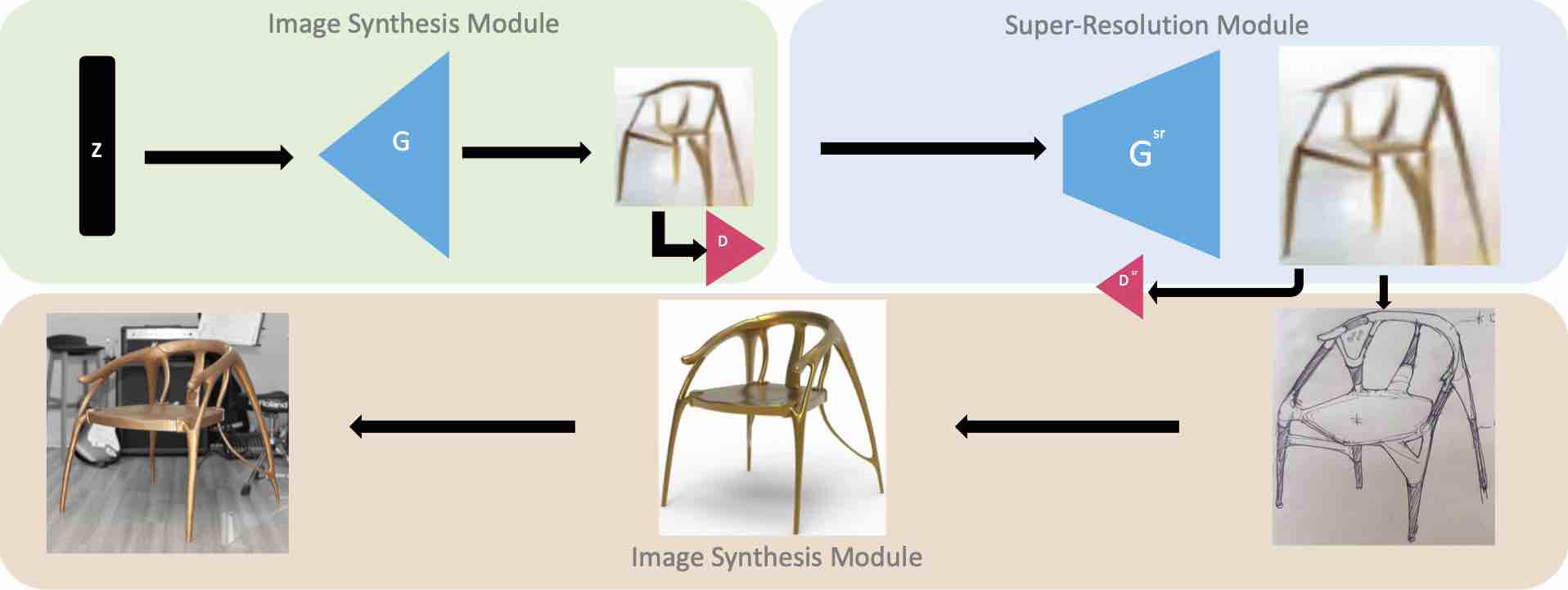

Network Architecture We present a deep neural network for assisting human chair design. It consists of an image synthesis module and a super-resolution module.

For image synthesis we adopt the DCGAN architecture, whose generator consists of a series of 4 fractional-strided convolutions with batch normalization and rectified activation applied. The discriminator has a mirrored network structure, with strided convolutions and leaky rectified activations instead. For super-resolution we adopt the architecture of SRGAN with
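To make the mirrored structure concrete, the sketch below traces how spatial resolution changes through 4 fractional-strided convolutions and back through 4 strided convolutions. The kernel size of 4, stride of 2, padding of 1, and the 4x4 starting resolution are assumptions chosen for illustration; the text does not specify them.

```python
def transposed_conv_out(size, kernel=4, stride=2, padding=1, output_padding=0):
    """Output size of a fractional-strided (transposed) convolution, dilation 1."""
    return (size - 1) * stride - 2 * padding + kernel + output_padding


def strided_conv_out(size, kernel=4, stride=2, padding=1):
    """Output size of an ordinary strided convolution, dilation 1."""
    return (size + 2 * padding - kernel) // stride + 1


def generator_resolutions(start=4, num_layers=4):
    """Resolutions seen by the generator; `start` is an assumed value."""
    sizes = [start]
    for _ in range(num_layers):
        sizes.append(transposed_conv_out(sizes[-1]))
    return sizes


def discriminator_resolutions(start=64, num_layers=4):
    """Resolutions seen by the mirrored discriminator."""
    sizes = [start]
    for _ in range(num_layers):
        sizes.append(strided_conv_out(sizes[-1]))
    return sizes


print(generator_resolutions())      # [4, 8, 16, 32, 64]
print(discriminator_resolutions())  # [64, 32, 16, 8, 4]
```

Under these assumed hyperparameters each layer doubles (generator) or halves (discriminator) the resolution, which is what makes the two networks mirror images of each other.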

Image Synthesis Module

For generator

Where

Super-resolution Module

We apply an adversarial loss and a perceptual content loss to generate higher-resolution images. Let

The difference between a high resolution image

Where
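As a rough illustration of how an adversarial loss and a content loss are combined for the super-resolution generator, the sketch below follows the formulation published with SRGAN: a content loss computed on feature maps plus a small multiple of the adversarial loss. The weight of 1e-3 is the value reported for SRGAN and is an assumption here, as are the flat-list feature representation and all function names; the losses are averaged rather than summed for readability.

```python
import math


def content_loss(features_hr, features_sr):
    """Mean squared error between feature vectors of the real high-resolution
    image and the super-resolved image (flat lists, for illustration only)."""
    if len(features_hr) != len(features_sr):
        raise ValueError("feature vectors must have the same length")
    squared = [(a - b) ** 2 for a, b in zip(features_hr, features_sr)]
    return sum(squared) / len(squared)


def adversarial_loss(disc_probs):
    """Mean of -log D(G(x)) over a batch, where each entry is the
    discriminator's probability that a generated image is real."""
    return sum(-math.log(p) for p in disc_probs) / len(disc_probs)


def perceptual_loss(features_hr, features_sr, disc_probs, adv_weight=1e-3):
    """Content loss plus weighted adversarial loss (adv_weight is assumed)."""
    return content_loss(features_hr, features_sr) + adv_weight * adversarial_loss(disc_probs)
```

The generator is pushed toward outputs whose features match the real image while also being hard for the discriminator to reject.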

Training We used 2 GTX 1080 Ti GPUs to train the image synthesis module for 200 epochs with a mini-batch size of 128, a learning rate of 0.0002, and Adam with
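For a sense of scale, the stated settings imply roughly the following number of optimizer updates. This is back-of-the-envelope arithmetic only: it takes the dataset size as exactly 38,000 (the text says "around") and assumes the final partial mini-batch of each epoch is kept; `training_steps` is a hypothetical helper.

```python
import math


def training_steps(num_images, batch_size, epochs):
    """Return (steps per epoch, total steps), keeping the last partial batch."""
    steps_per_epoch = math.ceil(num_images / batch_size)
    return steps_per_epoch, steps_per_epoch * epochs


per_epoch, total = training_steps(num_images=38_000, batch_size=128, epochs=200)
print(per_epoch, total)  # 297 59400
```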

Result

We select one of the candidates as a design prototype and create a real-life chair based on it. To the best of our knowledge, this is the first physical chair created with the help of a deep neural network, which bridges the gap between AI and design.